Innovation Scouting Resources and Know-How

Learn from the experts we meet, from our users, and from general guides on how to find innovation.

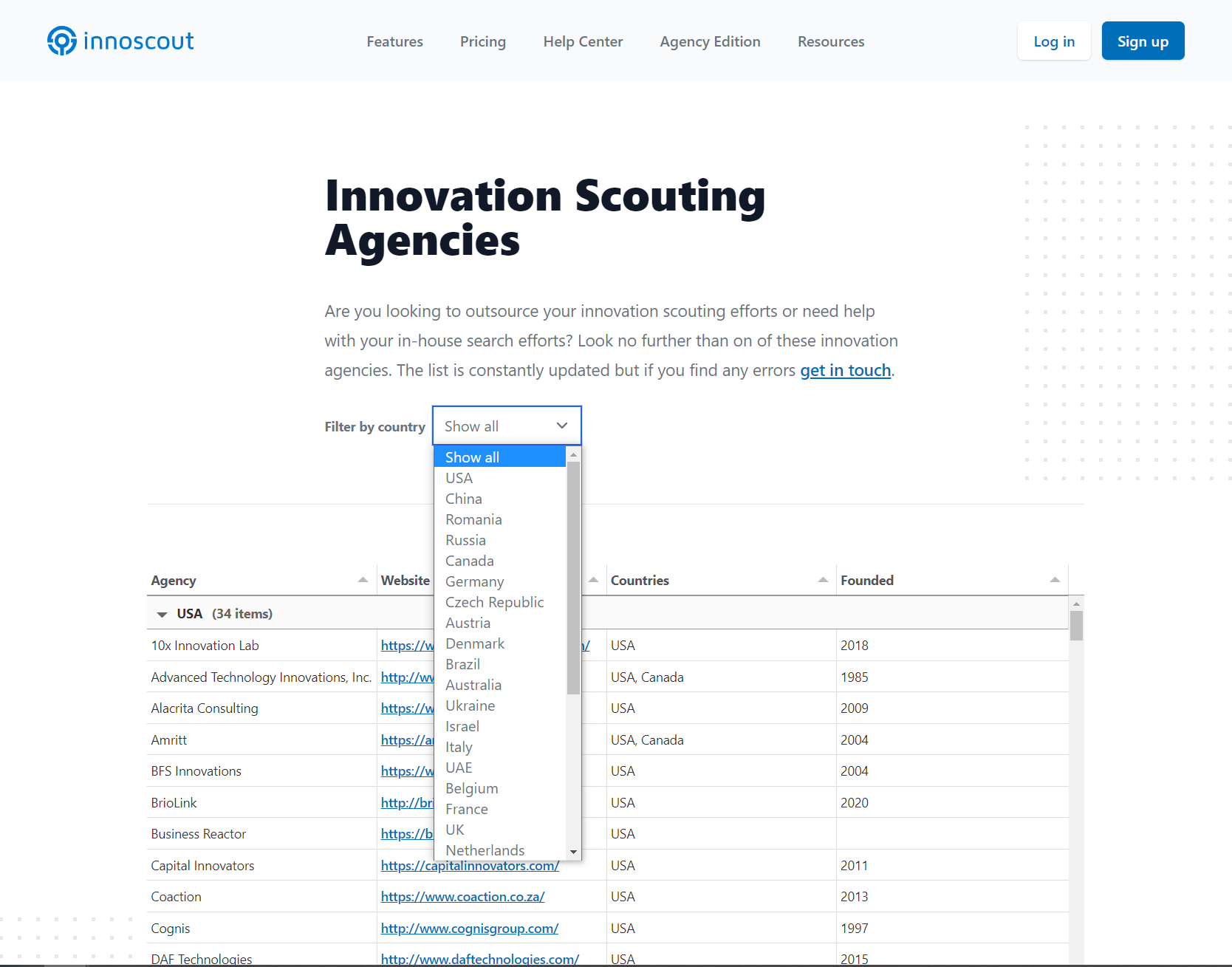

Looking for an innovation scouting agency?

Get expert know-how, or outsource your entire innovation discovery process to experts in your field.

Take a look at our database of innovation agencies